- [Dec 2025]: I successfully defended my Ph.D. dissertation, “Towards Multimodal Foundation Models for Human-Centered Robot Behavior”, at Korea University.

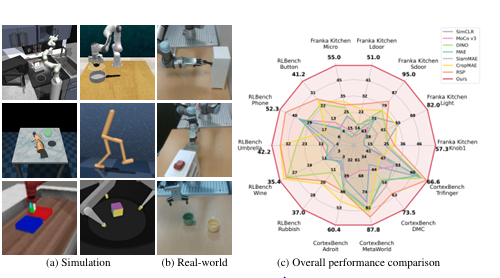

- [Sep 2025]: Our paper about visual representation learning got accepted to NeurIPS 2025.

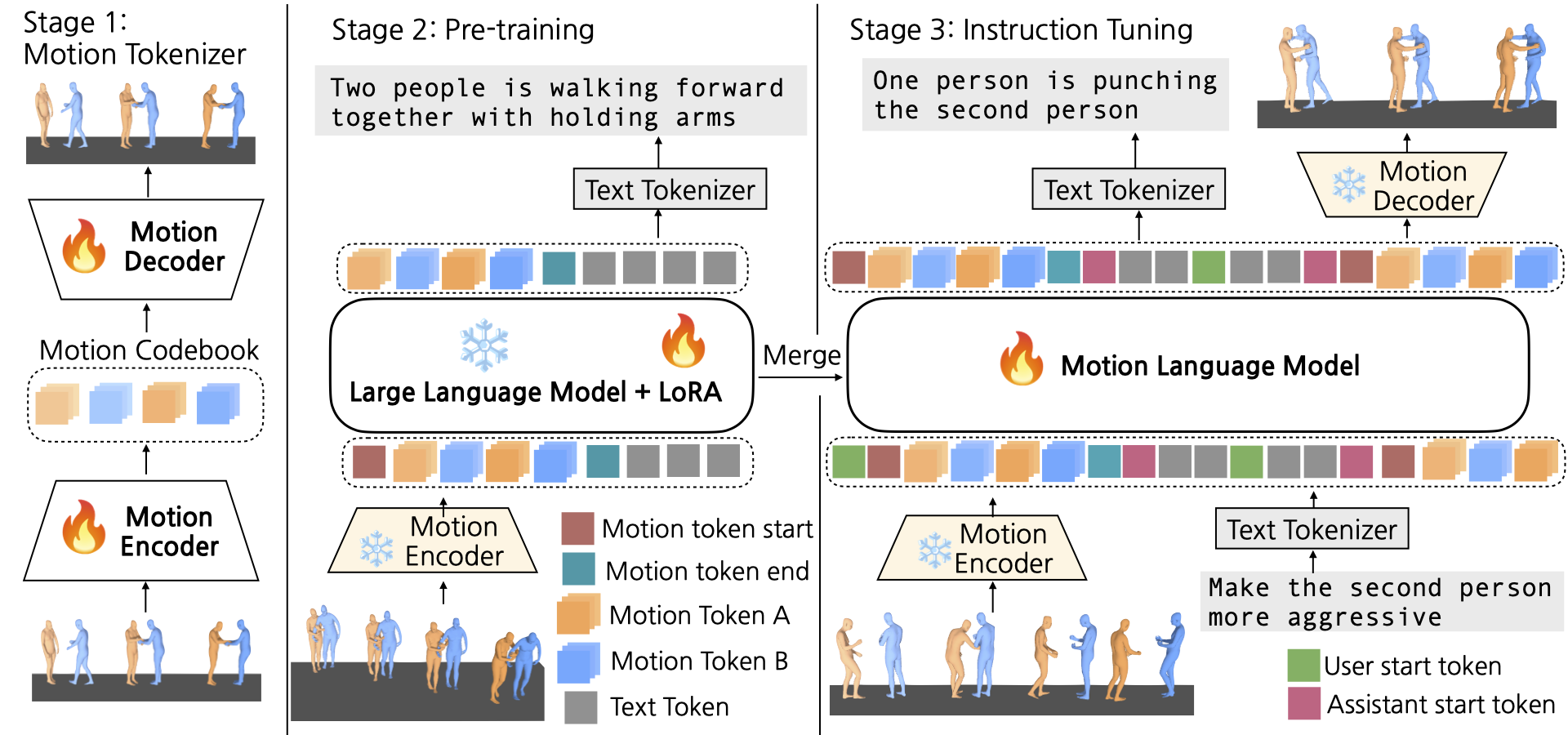

- [May 2025]: Our paper about an interactive motion-language model got accepted to ICCV 2025.

- [February 2025]: I am presenting our work on human-robot interaction at the Korea Robotics Society Annual Conference (KRoC) as an invited speaker in the flagship conference session.

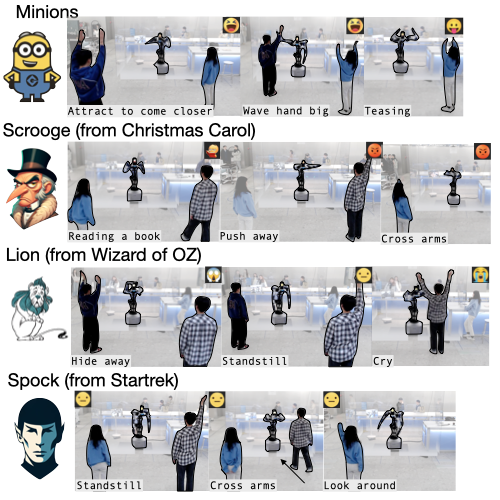

- [July 2024]: Our paper about persona-driven human-robot interaction has been accepted to Robotics and Automation Letters! We are looking forward to presenting our work at ICRA 2025.

- [May 2024]: Our paper about socially aware navigation has been accepted to RO-MAN 2024.

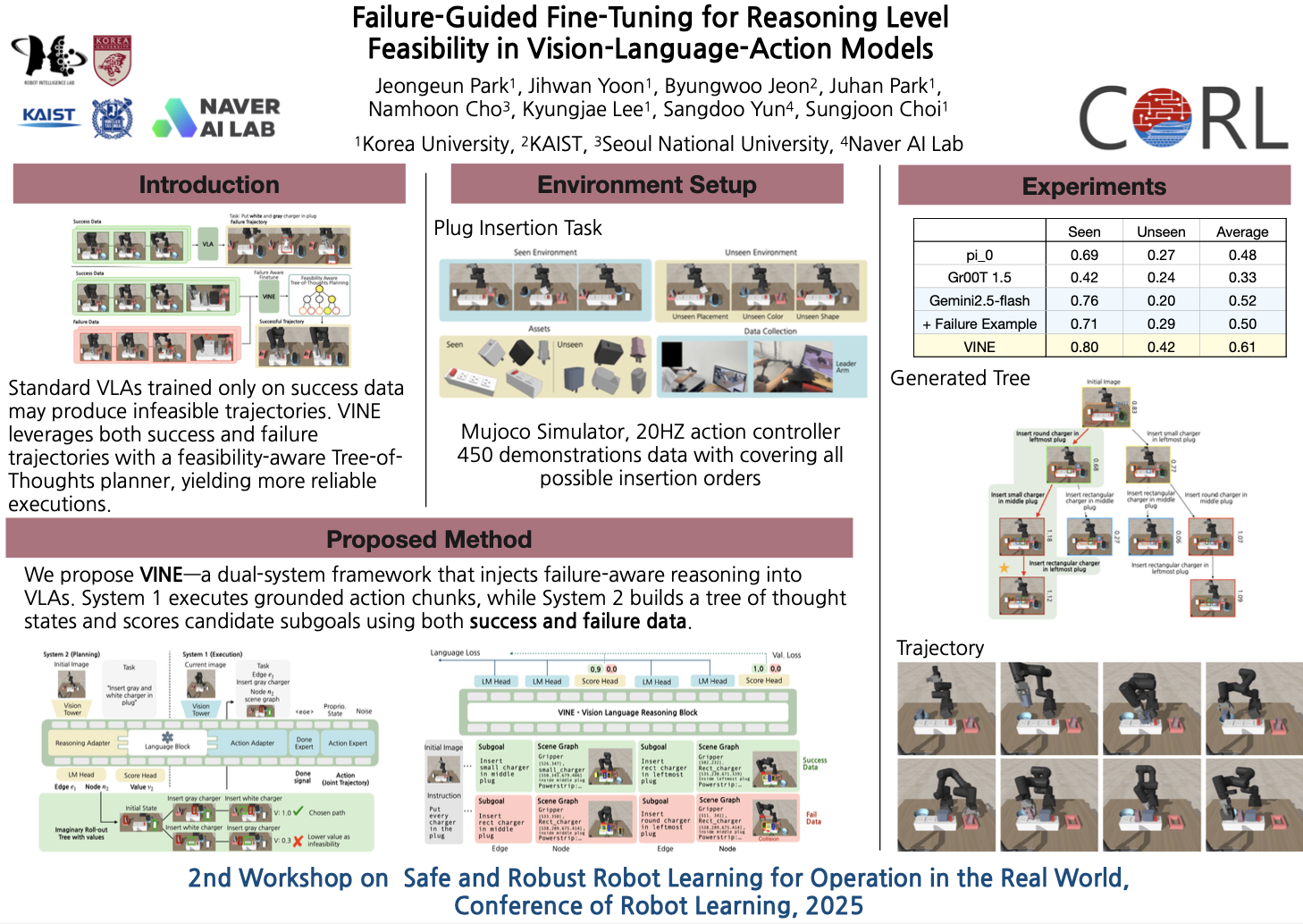

- [April 2024 - October 2024]: I started an internship at NAVER Cloud AI Lab, advised by Sangdoo Yun.

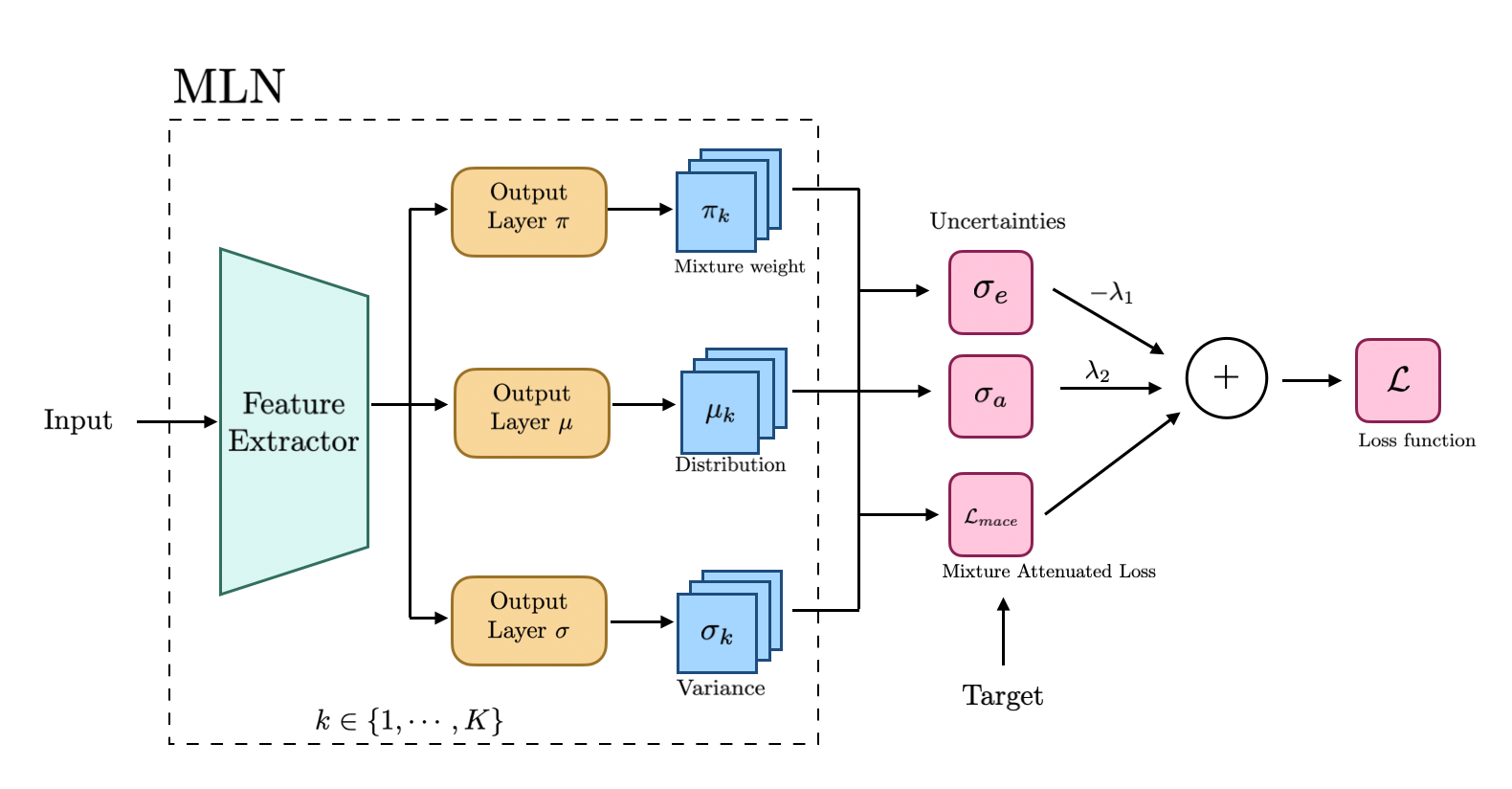

- [February 2024]: I am presenting our work on uncertainty estimation at the Korea Robotics Society Annual Conference (KRoC) as an invited speaker in the flagship conference session.

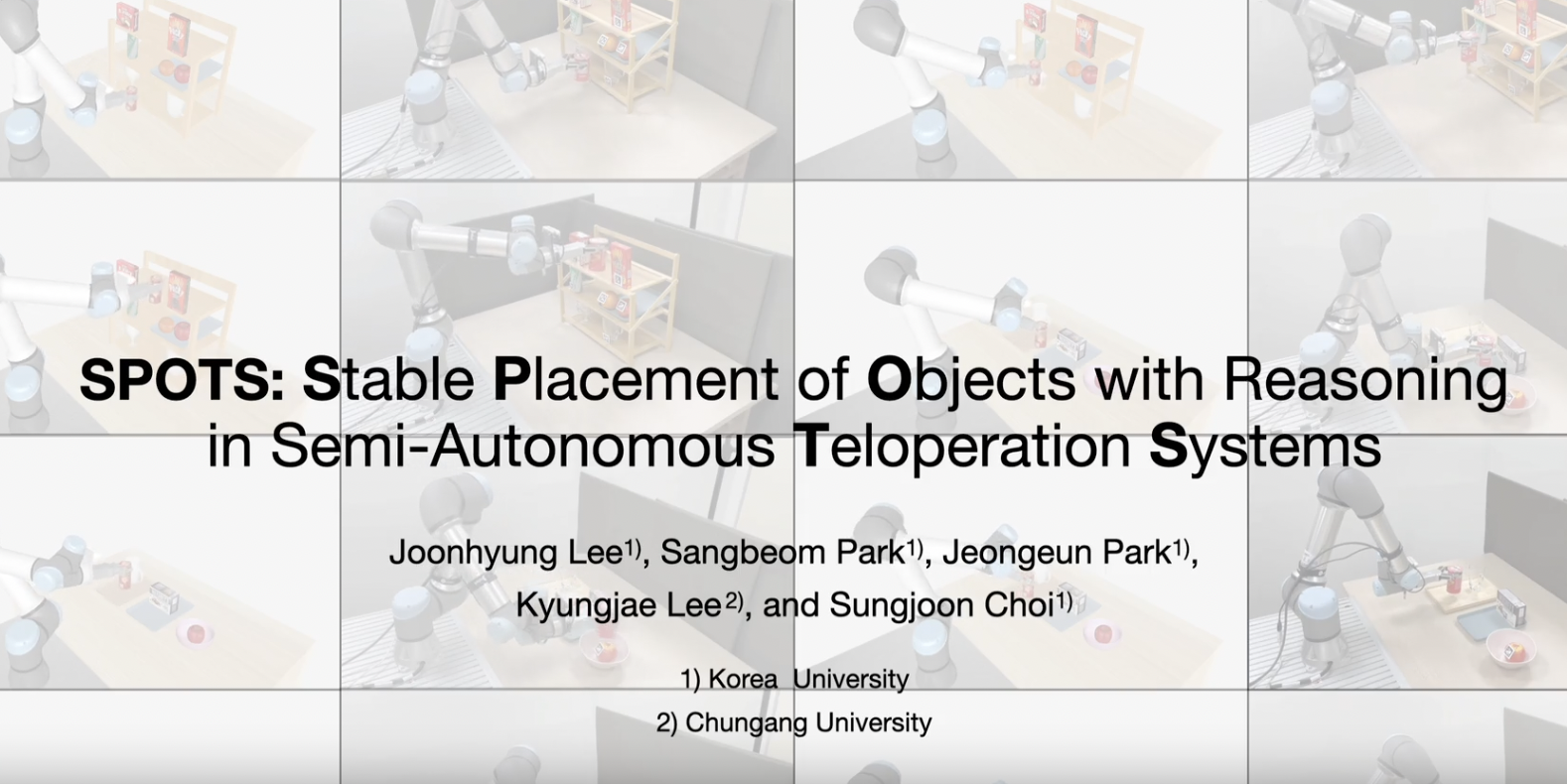

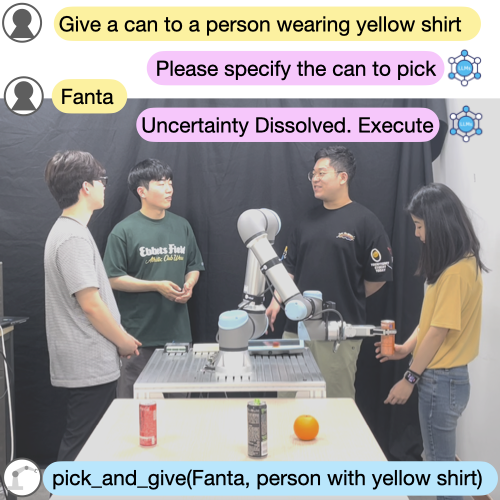

- [January 2024]: Our paper about semi-autonomous teleoperation with physical simulators and large language models has been accepted to ICRA 2024!

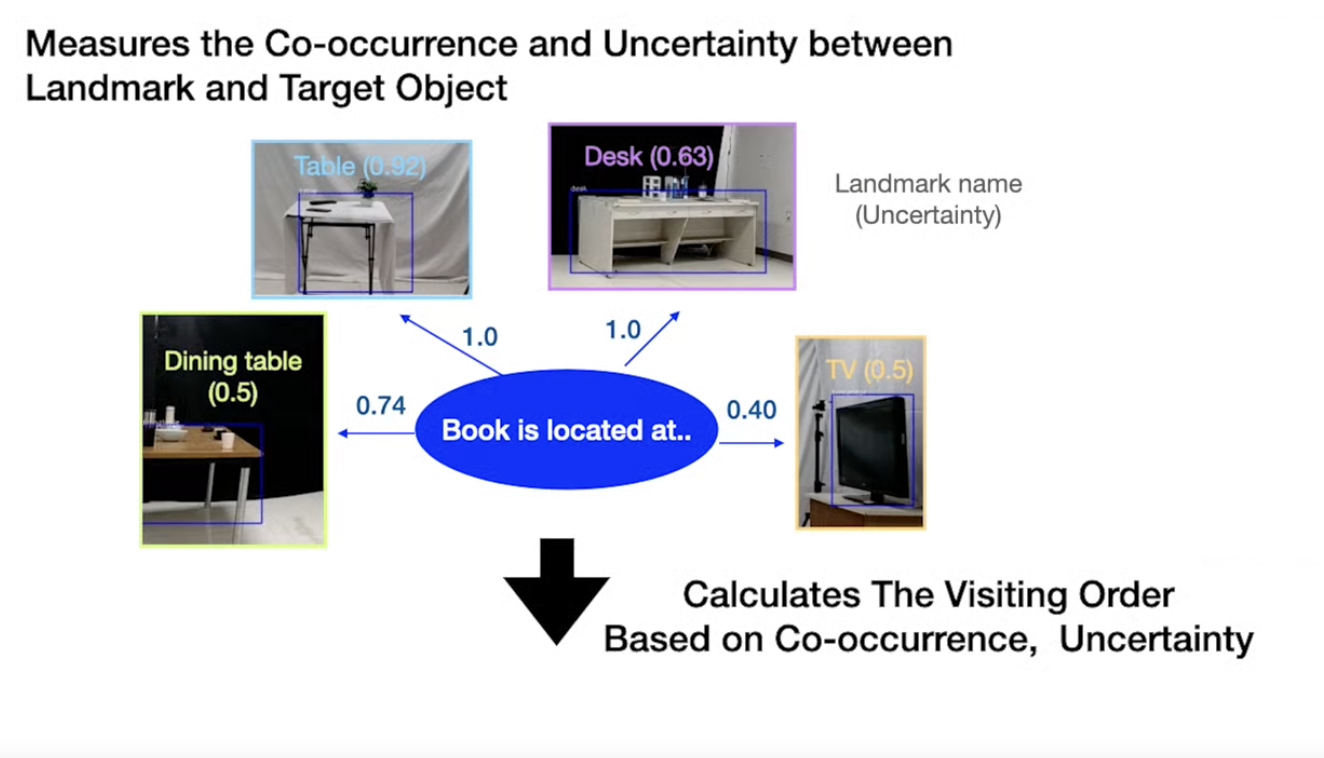

- [November 2023]: Our paper about uncertainty estimation from large language models has been accepted to Robotics and Automation Letters! We are looking forward to presenting our work at ICRA 2024.

- [February 2023]: I am presenting our work on vision-based navigation at a NAVER Tech Talk.

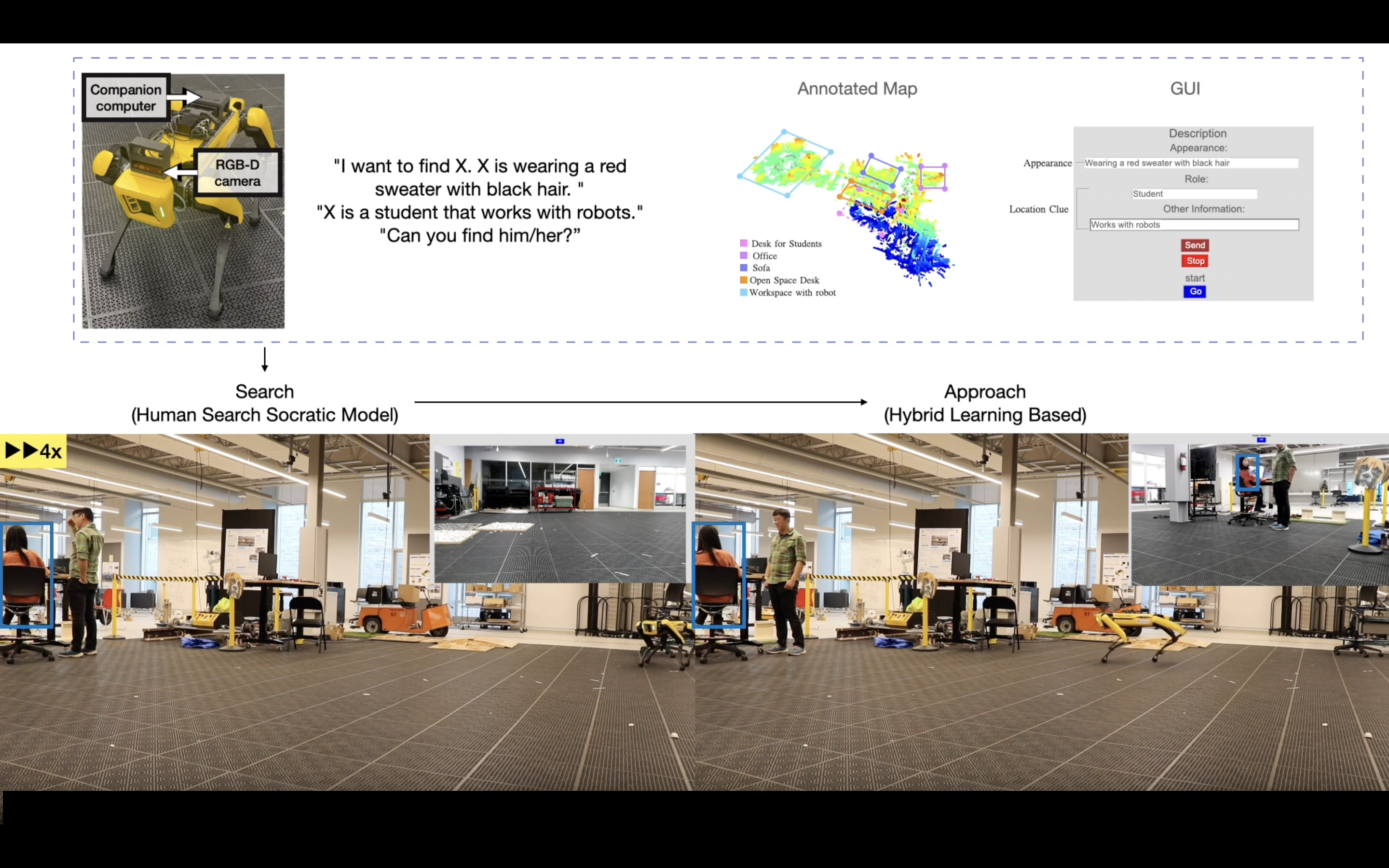

- [January 2023]: Our paper about active visual search has been accepted to ICRA 2023.

- [September 2022 - December 2022]: I am heading to Queen's University, Canada, as a visiting researcher, advised by Matthew Pan. This visit is funded by the Mitacs Globalink Research Award to Canada.

- [May 2022]: Our paper about an abnormal driving dataset has been accepted to IROS 2022.

- [January 2022]: Our paper about semi-autonomous teleoperation for non-prehensile manipulations has been accepted to ICRA 2022.

Hello, I'm Jeongeun Park

I am a Ph.D. student at Korea University, where I work with Sungjoon Choi on artificial intelligence and robotics. During my Ph.D., I have also spent time at NAVER AI Lab (Korea) and Ingenuity Labs (Canada).

I’m passionate about how robots can assist people in everyday life by collaborating and interacting naturally. In particular, I aim to investigate how foundation models can enable robots to understand, anticipate, and respond to human needs, making day-to-day tasks smoother and more intuitive.